Luckily, Mark Matienzo inadvertently came to my rescue in his capacity as co-chair of the EAD Roundtable. As you, the reader, may already know, UWM serves as the official repository for the Society of American Archivists' own archives, which include the records of its sections and roundtables. This year the EAD Roundtable is revamping its website, but it wanted to preserve its old EAD Help Pages as a historical record of the development of EAD and of support for it. The roundtable was kind enough to turn these pages into static web documents and subsequently zip them off through the ether via Yale's file-share service... so here we are. A chance for a fresh start at ingesting records properly! Stuff of obvious historical value! A chance to use the tools on Chris Prom's submission page! Joy to the wor--

Ah. "Most of [these tools] cannot be implemented without significant work to integrate them into an overall workflow or other applications."

You mean you can't unpack the source code in which these are provided?

Hahahahahahaha. No. We're still trying to get an OAIS-compliant repository up and running, and I certainly don't have the expertise to compile code for add-ons. We are just now starting to UNDERSTAND what in the world OAIS is even talking about, much less run an OAIS-compliant repository. Not that it's out of reach for us (again, Chris Prom's site makes the process seem much less daunting), but we're not there yet. Part of getting there involves a seamless submission process into the repository, and I don't have the technical expertise to implement any of the tools on the submission page. Oh well. It's still a cleaner process than what we were doing before.

One thing I DO have the expertise for is creating a rudimentary SIP (Submission Information Package). Check it out:

That's what I'm talking about, son. (Actually, I had no idea what the requirements for this even were before I took the SAA workshop on Arrangement and Description, so please ignore me when I pretend to be an e-records thug.) In any case, you'll notice the folder for metadata, the submission agreement (AKA "an email I saved from Thunderbird"), and two content folders: an originals folder, which will not be touched from here on out, and a working copy, which is where I will practice my mangling of collections. (This collection doesn't really lend itself to much rearrangement, so I may choose another one when I get to that step.) I won't open any of the folders right now, especially not the "Metadata" folder, because there's nothing in it yet. Let's fix that, shall we?
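For anyone who would rather script that skeleton than build it by hand, here is a minimal Python sketch. The `create_sip` helper and the folder names `metadata`, `originals`, and `working_copy` are hypothetical, chosen to mirror the layout described above; OAIS itself is a conceptual model and doesn't prescribe folder names.

```python
import shutil
import tempfile
from pathlib import Path
from typing import Optional


def create_sip(sip_root: Path, source: Path, agreement: Optional[Path] = None) -> Path:
    """Build a rudimentary SIP skeleton (hypothetical layout) from an accession folder."""
    sip_root.mkdir(parents=True, exist_ok=False)  # refuse to reuse an existing SIP folder
    (sip_root / "metadata").mkdir()  # empty for now; reports get saved here later
    # Two copies of the content: "originals" stays untouched, "working_copy" gets rearranged.
    # copytree copies files with shutil.copy2 by default, preserving modification times where it can.
    shutil.copytree(source, sip_root / "originals")
    shutil.copytree(source, sip_root / "working_copy")
    if agreement is not None:
        # e.g. the submission agreement, saved as an email file
        shutil.copy2(agreement, sip_root / agreement.name)
    return sip_root


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "ead_help_pages"
        src.mkdir()
        (src / "index.html").write_text("<html></html>", encoding="utf-8")
        email = Path(tmp) / "submission_agreement.eml"
        email.write_text("Subject: EAD Help Pages", encoding="utf-8")
        sip = create_sip(Path(tmp) / "sip", src, email)
        print(sorted(p.name for p in sip.iterdir()))
        # ['metadata', 'originals', 'submission_agreement.eml', 'working_copy']
```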

Yikes. The Duke Data Accessioner (DDA) XML output is not exactly the most human-readable document in the universe, though it could work if you have a way to crosswalk the metadata. Let's see if we can do better. (This is, obviously, not the XML for the EAD pages.)

Muuuuch better. The NARA File Analyzer's text output is tab-delimited, so you can easily import it into a spreadsheet for even easier reading. The other information in the DDA XML file isn't there, but there is a function to count files by type, which gives you more information about the accession as a whole. Unfortunately, the tool is a bit simple, which means you have to run each test separately. Hmm. I still wonder if we can do better.

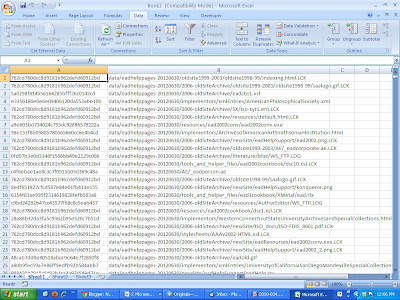
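The inventory-plus-count-by-type idea is simple enough to sketch in Python. This makes no claim about how the tool above works internally: the `list_files` name and its three columns are assumptions, and "type" here simply means file extension. Writing through the `csv` module also quotes any filename that happens to contain a tab, which keeps the columns lined up when the file is imported into a spreadsheet.

```python
import csv
import tempfile
from collections import Counter
from pathlib import Path


def list_files(root: Path, out_tsv: Path) -> Counter:
    """Write a tab-delimited inventory of every file under root; return counts per extension.

    Keep out_tsv outside root so later runs don't inventory the report itself.
    """
    files = sorted(p for p in root.rglob("*") if p.is_file())
    counts: Counter = Counter()
    with out_tsv.open("w", newline="", encoding="utf-8") as fh:
        writer = csv.writer(fh, delimiter="\t")  # quotes any field that contains a tab
        writer.writerow(["path", "size_bytes", "extension"])
        for path in files:
            ext = path.suffix.lower() or "(none)"  # files without an extension get a label
            counts[ext] += 1
            writer.writerow([path.relative_to(root).as_posix(), path.stat().st_size, ext])
    return counts


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp) / "accession"
        root.mkdir()
        (root / "index.html").write_text("<html></html>", encoding="utf-8")
        (root / "logo.gif").write_bytes(b"GIF89a")
        print(list_files(root, Path(tmp) / "inventory.tsv"))
```

Running each "test" separately goes away here because one pass collects both the per-file rows and the totals.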

Another option is Karen's Directory Printer, which is unfortunately Windows-only but does give you more information about each file, in tab-delimited format. Here's how that looks:

(I was going to show you this in Excel, but as it turns out the delimiters are off and it doesn't import correctly. Awkward.)
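When a tab-delimited export won't import cleanly, one quick diagnostic is to count the tab-separated fields on every line and flag the rows that disagree with the majority. A minimal Python sketch follows; the `check_tab_fields` name is hypothetical, it splits on raw tabs without honoring quotes, and the default UTF-8 encoding is an assumption (Windows tools often write a different encoding, so adjust as needed).

```python
from __future__ import annotations

import tempfile
from collections import Counter
from pathlib import Path


def check_tab_fields(tsv_path: Path, encoding: str = "utf-8") -> tuple[Counter, list[int]]:
    """Tally fields per line; return the tally and line numbers that differ from the most common count."""
    per_line: list[tuple[int, int]] = []
    with tsv_path.open(encoding=encoding, newline="") as fh:
        for lineno, line in enumerate(fh, start=1):
            fields = line.rstrip("\r\n").count("\t") + 1  # raw split, no quote handling
            per_line.append((lineno, fields))
    counts = Counter(n for _, n in per_line)
    if not counts:
        return counts, []
    expected = counts.most_common(1)[0][0]  # assume the majority field count is correct
    odd = [lineno for lineno, n in per_line if n != expected]
    return counts, odd


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        sample = Path(tmp) / "listing.tsv"
        sample.write_text("name\tsize\tdate\nindex.html\t120\t2011-05-01\nodd file\t99\n", encoding="utf-8")
        print(check_tab_fields(sample))  # the third line has only two fields
```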

Lastly, there's Bagger, a GUI for the Library of Congress's BagIt data packaging standard. Wrapping content in a bag allows for easy transfer of materials, which could be very useful if we ever move to a different repository space. I had to read through the manual for this one to see which components were necessary for the bag to be complete, but I eventually got there and ended up with the following manifest:

Beautiful. Clean and human-readable, if needed. (Generally it's NOT needed, because when you transfer a bag, the receiving computer can verify the checksums by itself.)
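Both halves of that exchange are simple enough to sketch. The Python below builds the core of a BagIt bag as RFC 8493 describes it: a `bagit.txt` declaration, the payload under `data/`, and a `manifest-sha256.txt` file with a checksum for every payload file. It then plays the receiving computer by recomputing those checksums. This is a minimal sketch under stated assumptions, not what Bagger does internally: the helper names are hypothetical, SHA-256 is an arbitrary choice of algorithm, optional files such as `bag-info.txt` and tag manifests are skipped, filenames containing line breaks or `%` are not percent-encoded as the spec requires, and validation covers only the payload manifest. For real transfers, stick with Bagger or the Library of Congress's bagit-python library.

```python
from __future__ import annotations

import hashlib
import shutil
import tempfile
from pathlib import Path


def file_digest(path: Path, algorithm: str = "sha256") -> str:
    """Hash a file in 1 MiB chunks so large files need not fit in memory."""
    h = hashlib.new(algorithm)
    with path.open("rb") as fh:
        for chunk in iter(lambda: fh.read(1024 * 1024), b""):
            h.update(chunk)
    return h.hexdigest()


def write_tag_file(path: Path, text: str) -> None:
    with path.open("w", encoding="utf-8", newline="\n") as fh:  # LF endings on every platform
        fh.write(text)


def make_minimal_bag(source: Path, bag_dir: Path, algorithm: str = "sha256") -> Path:
    """Sender side (sketch): copy source into bag_dir/data, then write bagit.txt and a payload manifest."""
    shutil.copytree(source, bag_dir / "data")  # also creates bag_dir
    write_tag_file(bag_dir / "bagit.txt",
                   "BagIt-Version: 1.0\nTag-File-Character-Encoding: UTF-8\n")
    lines = []
    for path in sorted(p for p in (bag_dir / "data").rglob("*") if p.is_file()):
        rel = path.relative_to(bag_dir).as_posix()  # e.g. data/index.html
        lines.append(f"{file_digest(path, algorithm)}  {rel}\n")
    write_tag_file(bag_dir / f"manifest-{algorithm}.txt", "".join(lines))
    return bag_dir


def validate_bag(bag_dir: Path, algorithm: str = "sha256") -> list[str]:
    """Receiver side (sketch): recompute checksums; an empty list means the payload checks out."""
    problems: list[str] = []
    listed: set[str] = set()
    manifest = bag_dir / f"manifest-{algorithm}.txt"
    for line in manifest.read_text(encoding="utf-8").splitlines():
        if not line.strip():
            continue
        expected, rel = line.split(maxsplit=1)  # "<checksum>  data/path"
        listed.add(rel)
        path = bag_dir / rel
        if not path.is_file():
            problems.append(f"missing: {rel}")
        elif file_digest(path, algorithm) != expected.lower():
            problems.append(f"checksum mismatch: {rel}")
    # Completeness: every file under data/ should appear in the manifest.
    for path in sorted((bag_dir / "data").rglob("*")):
        rel = path.relative_to(bag_dir).as_posix()
        if path.is_file() and rel not in listed:
            problems.append(f"not in manifest: {rel}")
    return problems


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as tmp:
        src = Path(tmp) / "ead_help_pages"
        src.mkdir()
        (src / "index.html").write_text("<html></html>", encoding="utf-8")
        bag = make_minimal_bag(src, Path(tmp) / "bag")
        print(validate_bag(bag))  # []
        (bag / "data" / "index.html").write_text("<html>edited</html>", encoding="utf-8")
        print(validate_bag(bag))  # ['checksum mismatch: data/index.html']
```

The manifest is plain text (one checksum and one path per line), which is why it stays human-readable even though a machine does the checking.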

So, which of these is going to go into our workflow? I'm leaning towards Bagger at the moment because it creates fixity checksums AND packages the data for ready transfer. (There's also a feature called "Holey Bag," which allows you to pull stuff in from the web; I'll have to try that one.) The NARA File Analyzer might be OK too, for simplicity's sake. Of course, neither tool captures the metadata recorded by the Duke Data Accessioner... but, as the man says, there's an app for that. Unfortunately, this post has already run long, so those will have to wait until next time.