
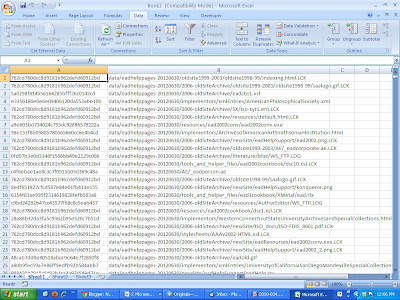

God Bless Jimmy McMillan. Helping people make Image Macros since 2010.

First, some context: this post is a response to comments in Maureen Callahan's post on You Ought To Be Ashamed regarding gender equity in hiring and promotion in Archives environments. Things got out of hand, as they tend to do: One person posted about how he didn't see the Glass Elevator as a real thing, another posted about how Supply and Demand would naturally correct the Archives market, and a third dismissed people who were chasing their dreams in the Archives world with "Good luck with that." I, foolishly, observed that most of the people taking issue with part or all of Maureen's post were records managers as opposed to archivists, and notably were records managers working in the private sector. Well, the foolish part was not that observation. The foolish part was my next logical leap, in which I tried to explain why the mindsets might be different:

> Although officially I am both an archivist and a records manager at UWM (and am, you know, chair of SAA’s Records Management Roundtable), I feel much more of an affinity towards my archival colleagues largely because of this disconnect. The vast, vast majority of the ARMA programs I’ve attended are really focused towards dealing with records in a private sector environment, with perhaps some mention of government records and records management in a university setting coming in a distant third, if at all. This emphasis is natural on the face of it, since the preponderance of ARMA members are corporate, but dig a little deeper and you see structural issues: $175 for full membership, no gradations? $50+ for downloadable standards? _$1000_ for registration for the annual meeting? I don’t know many archivists/RMs in the public sector who can afford this, much less afford membership in both ARMA and SAA, and so they choose the one that is both cheaper and has a more direct relevance to their professional development. Thus, the divide widens, and groups like RMRT can only do so much to bridge it.

I even said on Twitter that I was going to be stepping in it with this post, but this is something that has been bothering me for some time and it felt good to get it off my chest. Still, this paragraph didn't go unchallenged. Here's Peter Kurilecz:

> so are you suggesting that ARMA should have a progressive dues structure like SAA? ie should they be like AIIM and have a name your price dues structure? How many people drop their SAA membership as they climb the salary ladder because they now have to pay more in dues? I took a look at the membership breakdown for SAA and after some analysis found that if they implemented a standard price ala ARMA (and not ARMA’s price) that SAA would have increased dues receipts. It would also make more sense from an accounting standpoint. I hear way too many folks whining about the cost of membership, but how many of those same folks are buying a Starbucks coffee everyday? Even a plain regular coffee at $2.50 for vente? figure the cost at that amount they’re paying $75 per month or $900 per year. or do it just 15 days per month and they are paying $450 a year. way more than membership.
>
> how about a cell phone? what is the monthly cost? It all comes down to what is important to you. If it is really important you’ll find a way to pay for it.

I stand by my original comment, but this response suggests that I didn't do a wonderful job articulating what I meant. Let me take another whack at it.

Peter is, of course, right that one's ability to afford membership in any of these professional organizations is a matter of priorities, and that if you want it badly enough you'll find the money. The thing is... I don't think ARMA does a good enough job making archivist/RM hybrids such as myself want it. Yes, there are and continue to be programs sponsored by ARMA and the locals that are interesting to archivists in the public sector, particularly at the government level. ARMA Milwaukee's Spring Seminar this year is on "Information Governance and Records Management in the Federal Government", which is very obviously aimed at public sector archivists-- so I overstated my case on that. Mea culpa. But I don't think I'm reaching at all to say that most programs that ARMA sponsors are focused towards a very particular organizational culture, the kind where buy-in is achieved at C-level positions and/or through direct coordination with legal or audit departments. Corporate or fiscal environments are very good at this; government environments less so, but there's still enough to get a semblance of cooperation (witness the Obama memo on electronic records, which agencies have to at least pretend to follow).

My institution, conversely, is very much not like that. Getting the support of the CIO or the Provost does not at all guarantee that staff are going to follow appropriate records management practices, or adhere to taxonomies defined by upper management. Because I am in the Library rather than in Internal Audit or Legal Affairs, my power to enforce records management practices is limited to "soft power"-- going to individual offices and convincing them that establishing and following disposition schedules and records management policies is in their best interest from a legal, administrative, and historical standpoint. It's doable, but it's decentralized, and it can be very difficult, and I often have to operate on a shoestring budget (there's very little, if any, funding for a campus-wide EDMS, for example). Some of the programs and rhetoric at ARMA sessions and webinars acknowledge this difference in institutional culture and offer solutions for dealing with it. Many others do not.

In one sense, I don't blame ARMA or the locals for this focus on centralized culture, since most of their members come from that environment. But that also means that there is often not much incentive for archivists/records managers at institutions like mine to formally join ARMA and get the benefits that membership provides, because a lot of those benefits are just not as relevant as those found in other organizations, and in an era of shrinking personal and professional development budgets, sometimes a choice has to be made. If that choice is not in ARMA's favor, that reinforces ARMA's own incentive to cater to its existing members, program composition is altered, and a vicious cycle ensues.

It didn't get this way overnight, of course, and again, there is a LOT of good stuff to be had from ARMA even for university records managers such as myself. In my *opinion*-- I cannot emphasize enough that it is my opinion, because I have no concrete evidence-- a lot of it comes down to the money issue. To quote Peter again:

> as for the cost of standards have you seen what ISO charges?. Should not the university pay for those standards since you depend upon them to do your work? do you not budget for the purchase of standards and other reference materials.
>
> as for the cost of the conference you failed to mention that early bird registration gets a discount. What should the cost of the conference be? $500, $750, $250, free? do you want the conference at a nice venue or down market? price is but one factor

My own library pays for some-- by no means all-- of the ARMA standards. They are generally the ones that are directly relevant to my duties as University Records Officer, and the library staff sort of look askance at me when I order them, because in general the analogous documents on the Archives side of my job are significantly less expensive. Speaking of which, I cannot envision a world in which the UWM Libraries would pay for me to attend the ARMA annual conference. In this world of budget cuts and shrinking travel budgets, their question would probably be the same as mine, to wit: "What makes registration for ARMA worth 3x as much as registration for SAA?" I don't have an answer to that, and because I can't afford the registration fee myself without institutional assistance, I likely never will while in this position. (Since it's in Chicago this year I do plan on making it down to the Expo, since the price is right for that.) I cannot imagine that I am the only university archivist to be in this position, and to me it seems emblematic of ARMA's structural focus issue-- a fee that high suggests to me that the board expects its members to be mostly or entirely comped by their institutions, which is going to happen less and less even for government RMs.

Again, I have nothing against ARMA. I've had a lapsed membership because of the brouhaha in WI affecting my take-home pay, but I'm hoping I can renew it soon because I actually do get a lot out of their publications and seminars. (I don't even necessarily agree with the graphic at the top of this post, though I do think that even token dues levels would be a sign of good faith on reaching out to underemployed or undercompensated RMs.) One day I WOULD like to run for an officer position, as Peter suggests (although I still feel too much like a young'un right now). SAA's Records Management Roundtable, of which I am chair this year, is as we speak working on some plans to reach out to ARMA and local chapters and look for ways that we can collaborate on education and advocacy, etc.

But there are many Archivists with RM portfolios in my position who look at what ARMA has to offer and say "why bother?" As a result, the organization loses those perspectives, and the gulf between the professions is maintained.